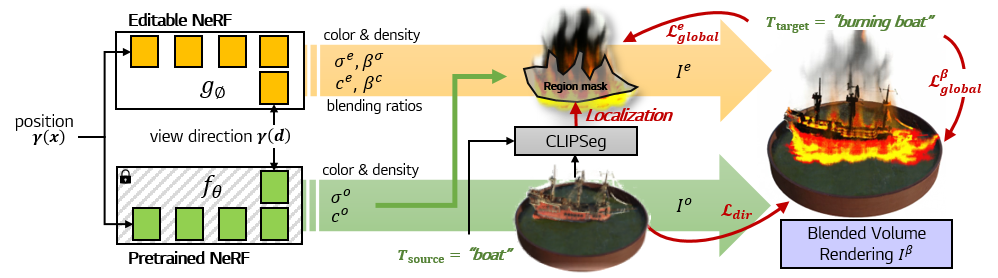

Method Overview

We propose Blending-NeRF, which consists of a pretrained NeRF $f_\theta$ for the original 3D model and an editable NeRF $g_\phi$ for object editing. The weight parameter $\theta$ is frozen, and $\phi$ is learnable. The edited scene is synthesized by blending the volumetric information of the two NeRFs. We use two kinds of natural language prompts: a source text $T_{\text{source}}$ and a target text $T_{\text{target}}$, describing the original and the edited 3D model, respectively. Blending-NeRF performs text-driven editing using CLIP losses with both prompts. However, CLIP losses alone are not sufficient for localized editing, because they do not specify the target region. Thus, during training, we use the source text to specify the editing region in the original rendered scene. Simultaneously, the editable NeRF is trained to edit the target region under the guidance of a localized editing objective. For more details, please refer to our paper.
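To make the blending step concrete, here is a minimal sketch of how per-sample outputs of two radiance fields along a single ray might be combined before standard volume rendering. It is not the blending operation from the paper. The function name `blend_and_render`, the additive density rule, and the density-weighted color mix are all assumptions chosen for illustration.

```python
import math

def blend_and_render(sigma_f, rgb_f, sigma_g, rgb_g, deltas):
    """Blend two radiance fields along one ray and volume-render a pixel.

    sigma_f, sigma_g: per-sample densities from the frozen and editable fields.
    rgb_f, rgb_g:     per-sample RGB triples from the two fields.
    deltas:           distances between consecutive samples on the ray.
    ASSUMPTION: densities add, colors are mixed in proportion to density.
    """
    pixel = [0.0, 0.0, 0.0]
    transmittance = 1.0  # fraction of light not yet absorbed along the ray
    for s_f, c_f, s_g, c_g, d in zip(sigma_f, rgb_f, sigma_g, rgb_g, deltas):
        sigma = s_f + s_g
        # Share of the blended density contributed by the original field.
        w_f = s_f / sigma if sigma > 0 else 0.0
        color = [w_f * a + (1.0 - w_f) * b for a, b in zip(c_f, c_g)]
        # Standard NeRF quadrature: opacity of this ray segment.
        alpha = 1.0 - math.exp(-sigma * d)
        weight = transmittance * alpha
        pixel = [p + weight * c for p, c in zip(pixel, color)]
        transmittance *= 1.0 - alpha
    return pixel

# Usage: a red original field with a blue edit that is dense at the third sample.
pixel = blend_and_render(
    sigma_f=[0.5, 1.0, 2.0, 0.1],
    rgb_f=[[1.0, 0.0, 0.0]] * 4,
    sigma_g=[0.0, 0.0, 5.0, 0.0],
    rgb_g=[[0.0, 0.0, 1.0]] * 4,
    deltas=[0.1] * 4,
)
```

Under these assumptions, setting the editable density to zero everywhere reproduces the original rendering exactly, so any change to the image can only come from regions where the editable field has placed density or color.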